Serverless is an incredibly powerful tool to have in your arsenal. It allows you to deploy an entire stack with a single command:

$ sls deploy -s dev

(in which dev is the environment you want to deploy to)

CloudFront is AWS’s content delivery network (CDN). It serves your API from edge locations around the world, which keeps it highly available. Its caching means that, once an endpoint has been called, identical calls won’t even reach the Lambda for as long as the cached response is alive. The cache does all the work for you.

What you will create in this tutorial:

- A deployed Lambda: where your code will run. This will sit behind an API Gateway, but you don’t need to worry about that.

- A CloudFront Distribution with a cache: this makes sure your service is available worldwide, with very little latency. It also manages the cache.

In this article, I’ll take you through each step to create a fully deployed stack. But there are a couple of things you need to take care of first. You’ll need:

- An AWS account, with billing set up (heads up: charges may be incurred)

- Your favourite terminal

- A delicious text editor

- Serverless (see the Serverless docs for installation instructions)

- AWS CLI (installed and configured)

Got all that? Awesome, let’s go!

Taking our first steps

We need to create a serverless project.

$ sls create --template aws-nodejs --path my-service

$ cd my-service

Done. Pat on the back for you. 👏

Step the second

Now’s a good time to edit your handler if you want to. This is where all the Lambda logic happens. The handler lives in handler.js (funnily enough) and, by default, just returns some JSON. Feel free to change this code to do whatever you like. For this tutorial, I’m leaving it as it is.

Once you’re happy, open up that most enticing of files, serverless.yml.

Cut out all of that commented code. While it may come in handy in the future, we’re doing everything from scratch now. Once you’re done, you should have something that looks like:

service: my-service

provider:
  name: aws
  runtime: nodejs6.10

functions:
  hello:
    handler: handler.hello

Great!

We can leave the provider section alone. There are more configurable properties, such as stage (the default stage) and endpointType. endpointType defaults to EDGE, which means that requests to your API are routed through CloudFront edge locations worldwide, reducing latency; the Lambda itself still runs in a single region.
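If you’d rather spell those options out, a sketch of the provider section with both set explicitly might look like this. The values are assumptions for this tutorial: dev as the default stage, and EDGE, which is already the default endpoint type anyway.

```yaml
provider:
  name: aws
  runtime: nodejs6.10
  stage: dev            # used when no -s flag is passed to sls deploy
  endpointType: EDGE    # edge-optimized API Gateway endpoint (the default); REGIONAL is the common alternative
```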

St3p

That functions section is looking awfully bare. Let’s add the following properties, alongside the handler property:

- description: some text describing the Lambda

- events: the events that will trigger the Lambda

In the events section put the following:

- http:
    path: my/cool/path
    method: get

This means the Lambda will be triggered when an HTTP GET request is made to <domain>/my/cool/path (on the API Gateway URL the stage comes first, e.g. <domain>/dev/my/cool/path).

Top notch stuff. So now your serverless.yml should look a little something like:
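Assuming you kept the default hello handler, the whole file would be something along these lines (the description text is only a placeholder):

```yaml
service: my-service

provider:
  name: aws
  runtime: nodejs6.10   # from the original template; AWS has since retired it, so new deploys likely need a current runtime such as nodejs20.x

functions:
  hello:
    handler: handler.hello              # the exported hello function in handler.js
    description: Some text describing the Lambda
    events:
      - http:
          path: my/cool/path            # API Gateway URL: https://<some_ID>.execute-api.<region>.amazonaws.com/<stage>/my/cool/path
          method: get
```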

Fabulous!

Step Quatro

So let’s try deploying. Just one more time for those that haven’t been paying attention…

WARNING: This may cause charges to your AWS account

$ sls deploy -s dev

(see the Serverless Docs for information on this command)

Now this is going to take a few minutes. So go and grab that well earned ☕️.

Oh wow! You’re back already? And everything is completed and worked perfectly!? (If not — I find this website really useful for solving problems).

When it’s done, you’ll be looking at a summary of your deployed service: its stage and region, its endpoints, its functions and so on.

All sorts of useful information there. But the bit you’re dying to try out is the endpoints, right? Go ahead!

Curl that baby ($ curl -v <your url>) and you’ll see a whole load of JSON appear, and at the top (with any luck):

message: "Go Serverless v1.0! Your function executed successfully!"

If you want your JSON to look all pretty, consider using jq.

So there you have it, you’re a serverless deity. Without even breaking a sweat.

Ok so we have our lambda up and running, responding to HTTP requests like a pro. So what else is there to do?!

You’ll notice in the above step a -v flag on the curl command. That’s so we can see all those amazing and informative headers. But there’s one in particular that we’re interested in:

x-cache: Miss from cloudfront

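And you’ll see that there on every request. Boo. (The header shows up because an edge-optimized API Gateway endpoint is itself fronted by CloudFront, but that AWS-managed distribution doesn’t cache your responses.)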

That means that our Lambda is being called every time!? But we return the same data, so what’s the point in using all that expensive Lambda computing power? Let’s just cache it at the CloudFront level, and let our Lambda put its feet up. 😎

5tep

Enabling caching is a little more complex than what we’ve been doing, but not so complex that you’ll leave this tutorial.

Please remember: YOU ARE NOT A QUITTER!

Glad we’ve got that cleared up. With that in mind, let’s look at the following YAML.
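A minimal sketch of a block like that is below. It assumes the ApiGatewayRestApi logical ID that Serverless gives the REST API it generates, a hypothetical origin ID (MyLambdaOrigin), the us-east-1 region, the dev stage and a 30-second cache; adjust these to your own setup.

```yaml
resources:
  Resources:
    CloudFrontDistribution:
      Type: AWS::CloudFront::Distribution
      Properties:
        DistributionConfig:
          Comment: CloudFront distribution in front of my-service   # free text
          DefaultCacheBehavior:
            TargetOriginId: MyLambdaOrigin         # hypothetical ID; must match an Id under Origins
            ViewerProtocolPolicy: redirect-to-https
            DefaultTTL: 30                         # seconds to cache when the response sends no caching headers
            ForwardedValues:
              QueryString: false                   # query strings are not forwarded to the origin
          Enabled: true
          Origins:
            - Id: MyLambdaOrigin
              DomainName:                          # <api id>.execute-api.us-east-1.amazonaws.com
                Fn::Join:
                  - "."
                  - - Ref: ApiGatewayRestApi       # Ref returns the REST API's ID
                    - execute-api.us-east-1.amazonaws.com
              OriginPath: /dev                     # the stage deployed with -s dev; ${opt:stage, 'dev'} would follow the -s flag instead
              CustomOriginConfig:
                OriginProtocolPolicy: https-only   # API Gateway only serves HTTPS
```

Because the stage lives in OriginPath, the CloudFront URL itself doesn’t need the /dev prefix.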

Let’s break that down before it makes us break down.

There are two main sections as I see them:

Section 1 — Type

resources:
  Resources:
    CloudFrontDistribution:
      Type: AWS::CloudFront::Distribution

The first simply declares a resource, in this case our CloudFront Distribution, which is where the cache will live.

CloudFrontDistribution is the name of our CloudFront Distribution; we could call it JoeSchosSuperDistribution if we wanted to. Type: AWS::CloudFront::Distribution tells AWS what it needs to create. There are many different types of these, but they are pretty well documented by AWS.

Section 2 — Properties

Hmm.. Indeed.. I see.. Yes… I could try to explain each one of these, but to be honest, AWS’ docs are good enough to explain themselves. Take a look.

At the root level:

- Comment: just a human-readable comment so you know what the distribution is for.

- DefaultCacheBehavior: how you would like the cache to behave.

- Enabled: will the distribution accept and respond to HTTP requests?

- Origins: where CloudFront fetches the content it caches (here, our API Gateway URL).

Now that we have a better understanding of what all that means 😅, let’s add it to our serverless.yml file, just below everything we already have there.

So what you have now should look a little something like this:

Note: The us-east-1 above can be changed to whichever region you are deploying to, or resolved automatically using serverless-pseudo-parameters.

Time to deploy! (again)

$ sls deploy -s dev

So now, instead of calling the URL we used earlier (the API Gateway URL), we are going to use a different URL, which points to the CloudFront Distribution.

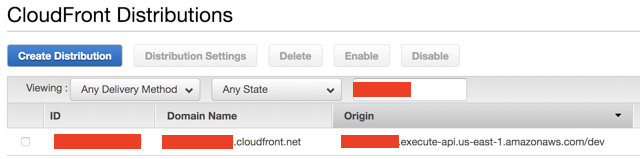

To find it, copy the <some_ID> part of the previous URL, then head to the AWS Console and open CloudFront. Search for that <some_ID> in the query box. There should be only one result, which looks a little something like this:

The part we’re after is the Domain Name (the part that ends with .cloudfront.net), so copy that!
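If you’d rather not hunt through the console, one option is to have the stack output the domain for you. A sketch, assuming the CloudFrontDistribution logical ID used in this tutorial; CloudFrontDomainName is a hypothetical name for the output. Fn::GetAtt with DomainName returns the distribution’s .cloudfront.net domain.

```yaml
resources:
  # Resources: keep your CloudFrontDistribution block here, unchanged
  Outputs:
    CloudFrontDomainName:               # hypothetical output name
      Description: Domain name of the CloudFront distribution
      Value:
        Fn::GetAtt: [CloudFrontDistribution, DomainName]
```

After the next deploy, sls info --verbose should list it under Stack Outputs.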

$ curl -v <your cloudfront domain>/my/cool/path | jq

Notice that the /dev part of the URL is gone. The CloudFront URL doesn’t need it, because each environment gets its own CloudFront Distribution, already pointed at that stage.

Check those headers. You should see the same as before:

x-cache: Miss from cloudfront

Don’t worry! That’s expected. However, while it looks the same to you, some MAGIC has happened behind the scenes. Your CloudFront Distribution has cached that response and will serve it to further requests for that same URL (at least those that reach the same edge location), without even trying to wake up your Lambda! (Well, for 30 seconds anyway.)

After 30 seconds, the cached response expires: the next call goes through to the Lambda, its response is cached for another 30 seconds, and so on…

Obviously, you can increase the time to suit your needs (using the `DefaultTTL` property).
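For instance, to hold responses for five minutes you might set something like the following in the DefaultCacheBehavior section. This is a sketch: the origin ID is a hypothetical placeholder that must match the Id in your Origins list, and the numbers are examples. Keep in mind that DefaultTTL only applies when the Lambda’s response carries no Cache-Control or Expires header of its own; if it does, CloudFront honours that header, clamped between MinTTL and MaxTTL.

```yaml
# Inside DistributionConfig
DefaultCacheBehavior:
  TargetOriginId: MyLambdaOrigin      # hypothetical; must match an Id under Origins
  ViewerProtocolPolicy: redirect-to-https
  DefaultTTL: 300                     # 5 minutes, used when the response sends no caching headers
  MaxTTL: 3600                        # never cache longer than an hour, whatever the response asks for
  ForwardedValues:
    QueryString: false
```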

So, let’s hit the endpoint again (if it’s been more than 30 seconds since you last called it, do it twice), and check the headers again.

With any luck, you’ll see:

x-cache: Hit from cloudfront

It worked! CloudFront answered that request by itself, without ever calling the Lambda. Magic.

An end to the madness

So let’s think about what we’ve created.

We created a Lambda using Serverless. This is a function that will run only when the HTTP endpoint specified is called. That was super simple.

We also created a CloudFront Distribution, with caching enabled. This makes our API available worldwide with very low latency. Plus, the cache means that we don’t use processing power to return the same data again and again.

Amazing, well done you!

Oh, one more thing! If you’d like to destroy everything you’ve created on AWS, simply run the following command:

$ sls remove -s dev

While this should tear down everything you’ve created, it’s a good idea to comb over your AWS account and double-check there are no leftovers, to avoid being charged!

🎉👏🔥🙌